The next 25 years of Google – here are its 7 most exciting future projects

For most of us, Google is the go-to tool for getting answers online. From essay questions to street directions, Google has become a byword for finding the information you need. With tools like Drive and Gmail, it’s also shaped the way we communicate and share in 2023. But there’s a whole lot more going on behind the scenes at Google: with a host of groundbreaking innovations in the pipeline, we’ve picked out our favorite future Google projects here.

Google turns 25

This is part of a series of TechRadar articles marking and celebrating Google's 25th birthday. Read them all here.

Google began with an algorithm developed to better rank websites in search results. And since its inception in 1998, experimentation has never been far from the company’s agenda. So as it officially turns 25 today, it’s little surprise that Google continues to push the envelope when it comes to harnessing data and information.

From models that streamline the link between human commands and robot actions, to tools that can transform text prompts into music, Google’s working at the cutting edge of deep learning, machine learning and AI – and finding new ways to bring the results into our daily lives.

As we know from previous projects, not every Google endeavor will see the light of day. But looking at the developments below gives us an exciting glimpse of what Google’s got in store for the next 25 years – and some might arrive sooner than you think…

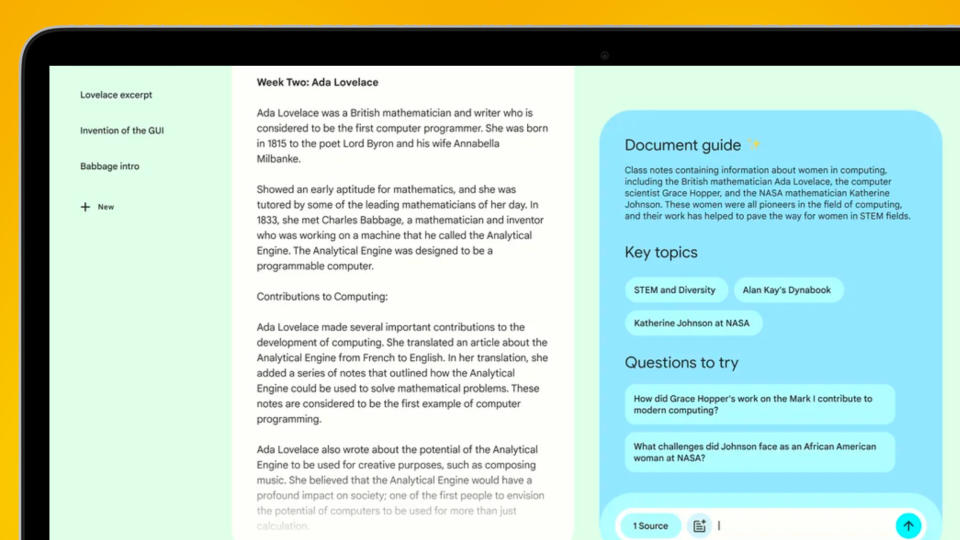

1. NotebookLM

Exciting for: anyone who deals with documents

Contextual awareness is a crucial step for AI tools, moving from broad-brush responses to highly specific feedback. NotebookLM promises to do that in a research context. Described by Google as a “virtual research assistant”, the experimental aid can summarize material, simplify complex ideas and suggest new connections. What sets it apart from today’s generative chat tools is that you can root its responses in your own notes.

Right now, users in the US can choose Google Docs as sources. NotebookLM will then use the power of its language models to create an automatic overview, helping you quickly get the gist of the topics in your sources. From there, you can ask it in-depth questions about the content to streamline your understanding. Arguably the most exciting element, though, is NotebookLM’s ability to create new ideas from your sources: Google gives the example of a founder working on a pitch, asking NotebookLM for the questions investors could ask.

Google cautions that you should still fact-check NotebookLM’s responses, although this is made easier by the fact that each response cites a source in your documents. Google’s planning to add support for more formats soon, which is where it could really boost productivity. Instead of spending hours combing through a PDF to draw out specific information and trends, NotebookLM could give you that instantly.

2. AlphaFold

Exciting for: scientists who study molecular structures

Not everyone needs access to accurate predictions of protein structures. But for biological researchers studying diseases and the drugs that could treat them, AlphaFold is a game-changer. Developed by Google’s DeepMind unit, it uses deep learning to predict these protein structures based on their amino acid sequences – a task that has historically been difficult and time-consuming for scientists.

According to DeepMind, AlphaFold has predicted the structure of pretty much every protein currently known to science. That’s nearly 200 million. It cracked them in just 18 months, and with remarkable accuracy, too. With these protein structure predictions available for researchers to study, the outcome is a win for humanity. It’s not a silver bullet, but Google has given scientists a crucial boost in the fight against everything from malaria to plastic waste.

And there’s more in store for AlphaFold. Ongoing developments are expected to increase the accuracy of its findings. Researchers are also exploring how the tool could be applied to predict other molecular structures, which might mean big things for materials science.

3. RT-2

Exciting because: it’s helping robots to see

Teaching robots to see isn’t as simple as giving them eyes: seeing means understanding what you’re looking at – and that’s what Google’s RT-2 project is all about. Say you want to build a robot that can cook you eggs for breakfast. You can strap a camera to its head, but before it can get cracking, you need to teach it what an egg actually is.

The idea of RT-2 is to do this on a grand scale, with the first vision-language-action model. Trained using text and image data from the web, together with robotics data, its aim is to allow robots to process visual inputs and transform them into physical actions. In essence, robots using the RT-2 model should be able to understand what they’re looking at and what they should do about it.

The significance for robotics is potentially huge. It’s a development that better equips robots for contextual recognition and reasoning, taking us closer to a future where machines can operate independently of human supervision, whether that’s in homes, offices or hospitals. RT-2 is a work in progress, but the pathway it opens is a remarkable – and to some people, scary – one.

4. Gemini

Exciting if: you use ChatGPT

OpenAI’s ChatGPT has catapulted generative language models into the mainstream, allowing almost anyone to obtain natural text responses from simple prompts. Gemini is Google’s rival to GPT-4 – and all signs point towards it levelling up on both capacity and capability.

Like GPT-4, Gemini will be multi-modal, so it will work with different data types – but it may go further than the text and image processing supported by GPT-4. That could mean graphs, videos and more. Google also says that Gemini will be future-proofed: it’s floated the potential for skills such as memory and planning, opening the door to more complex tasks.

Actually a network of models, Gemini channels the abilities of AlphaGo – the DeepMind program which famously became the first to beat a professional human Go player. Reinforcement learning played a key role in this, allowing it to improve decision-making over time. If Gemini builds on this approach, it could advance well beyond what GPT-4 can do. For now, it’s still in training, with only a select number of companies granted early access.

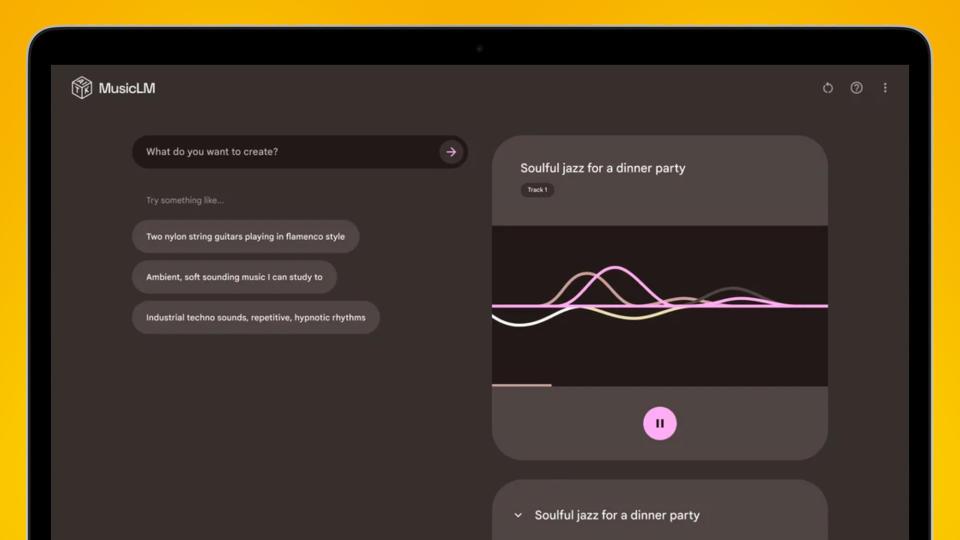

5. MusicLM

Exciting for: anyone with a song in their head

Proof that no medium is immune from the impact of AI, Google’s MusicLM tool lets you generate instant musical compositions based on straightforward text prompts. It’s available to try now through Google’s AI Test Kitchen on iOS, Android and the web. Simply type in your idea – such as, “ambient orchestral music for a walk in the countryside” – and it’ll create two tracks to match. You can then pick your favorite to help the model improve.

Much more than a musical plaything, MusicLM is already able to understand pretty complex requests, covering elements from tempo and instrumentation to genres, eras and locations. Its potential will only grow as its capabilities evolve, giving users increased control over the resulting soundtrack. Given the likelihood of a frosty reception from the music industry, Google is working with musicians to explore how MusicLM can enhance, not inhibit, creativity.

For composers, MusicLM might unlock the option to create an instant draft of a musical concept they have in mind, something which would previously have taken hours using professional software. Or it could allow songwriters to generate a quick backing track to test out a lyric. It could also inspire new musicians without any formal training.

6. Search Generative Experience (SGE)

Exciting for: anyone who searches using Google

Google made its name as a search engine, so it’s fitting that some of its most exciting developments are coming in that area. Search Generative Experience is Google’s vision of the future of search, using generative AI to supercharge results with contextual information. It’s like having ChatGPT help you with every query.

Currently, most Google searches return a list of relevant websites. Depending on your query, it’s down to you to join the dots. SGE changes that: it takes search terms as a prompt for a generative text answer, which is shown above standard search results. This invites you to interact through suggested links and questions, and allows you to phrase your own follow-up questions in an AI chat that’s context-aware.

Google is also working on a search tool that harnesses data from Google’s Shopping Graph to give real-time buying advice, covering products, reviews and pricing. It’s all experimental at present; you’ll need to sign up for Search Labs on Chrome desktop or the Google app for Android and iOS in the US to try it. But the promise is a more efficient, content-rich search experience that helps you get to the right answers faster.

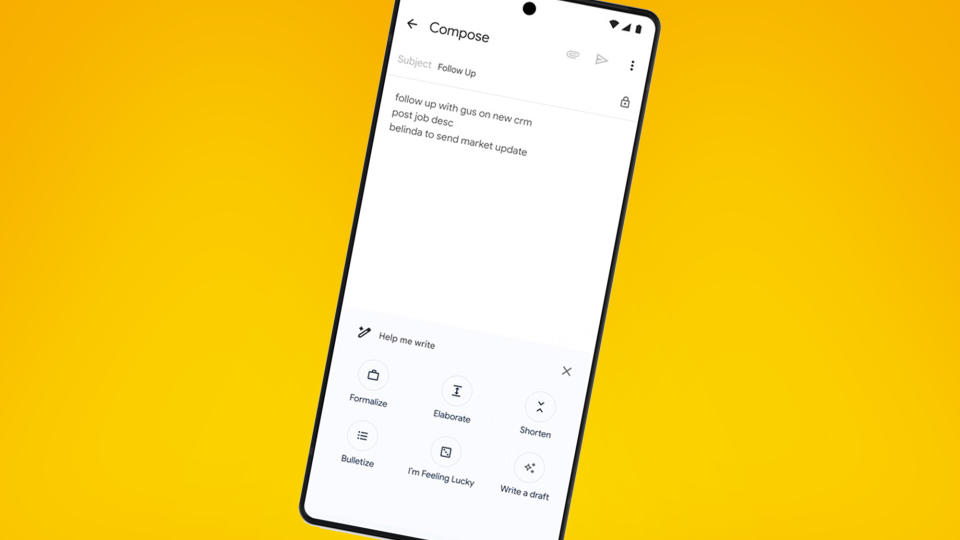

7. Duet AI

Exciting for: workplace productivity

The workplace of tomorrow will be built around AI, and some of the most powerful tools will be the least sexy – but also the ones that make you more productive by eliminating grunt work. That means tools that write your emails and proofread your documents, with options to adjust tone and style. Slide decks where you can request a generative background in just a few words, without hunting for a stock image. And spreadsheets that understand the context of data, to save you from countless hours of labelling.

That’s all coming to Google Workspace with Duet AI. While the tools themselves are impressively powerful, it’s arguably their execution in the context of existing Google products that makes them so exciting. You won’t need to learn how to use new platforms or interfaces; instead, you’ll find AI assistance woven into familiar places, including Gmail, Docs, Sheets, Slides and the like.

At a practical level, that’s the true power of AI for most professionals – making existing tasks quicker and easier to achieve, within the same digital setting. And this is just the start. You can bet that in another 25 years, getting things done with Google will look a whole lot different.

Yahoo Autos