Autonomous Cars Could Improve Traffic Safety by Driving More Like Assholes

Tech company Intel says that autonomous vehicles could afford to take more risks while driving.

Artificial intelligence systems in most driverless test vehicles can cause the car to behave like an anxious human driver.

Intel's Responsibility-Sensitive Safety (RSS) program is meant to help autonomous vehicles act more like assertive human drivers.

Here's something you don't often hear: Driverless cars are too obsessed with safety. But Intel and its subsidiary Mobileye think automated vehicles (AVs) should relax and take more risks, so they've developed a program called Responsibility-Sensitive Safety (RSS) to make AVs act more like human drivers. We know what you're thinking: Humans are terrible drivers. But Intel says more assertive AVs will make for safer, freer-flowing traffic.

Typical AVs use artificial intelligence (AI) to make decisions, constantly calculating the probability of a crash. The problem is, they move only when that probability is very low, much like an anxious driver who waits too long to make a left turn and misses the gap. The result is a kind of AV paralysis, and frustrated passengers.

But RSS differs from these AI systems in that it's deterministic, not probabilistic. The system gives an AV a playbook of preprogrammed rules that define safe and unsafe driving situations. Rather than playing it ultraconservative like most AVs, RSS allows the car to make more assertive maneuvers, right up to the line that separates safe from unsafe. Much like a human driver, an RSS-equipped AV knows a crash is possible even when it merges onto the highway at the correct speed, but it won't dissolve into inaction over the small chance that another car will misbehave.
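For readers curious what one of these deterministic rules looks like, here is a minimal Python sketch of the safe following-distance rule described in Mobileye's published RSS model. It assumes the worst case: the rear car keeps accelerating for a short response time before braking only moderately, while the front car slams on its brakes. All parameter values (`RssParams` and its defaults) are illustrative assumptions, not Intel's or Mobileye's calibrated numbers, and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RssParams:
    """Illustrative parameters (assumed values, not Mobileye's calibration)."""
    response_time_s: float = 0.5   # rho: time before the rear car starts braking
    max_accel: float = 2.0         # m/s^2, worst-case acceleration of rear car during rho
    min_brake_rear: float = 4.0    # m/s^2, braking the rear car is guaranteed to apply
    max_brake_front: float = 8.0   # m/s^2, hardest the front car could possibly brake


def rss_safe_distance(v_rear: float, v_front: float,
                      p: RssParams = RssParams()) -> float:
    """Minimum safe gap in metres for a rear car following a front car."""
    rho = p.response_time_s
    # Distance covered during the response time, assuming worst-case acceleration.
    during_response = v_rear * rho + 0.5 * p.max_accel * rho ** 2
    # Speed reached after the response time, then stopping at the guaranteed deceleration.
    v_after = v_rear + rho * p.max_accel
    rear_braking = v_after ** 2 / (2 * p.min_brake_rear)
    # The front car stops in the shortest distance it possibly could.
    front_braking = v_front ** 2 / (2 * p.max_brake_front)
    # A negative result means any gap is safe, so clamp at zero.
    return max(0.0, during_response + rear_braking - front_braking)


def is_safe(gap_m: float, v_rear: float, v_front: float,
            p: RssParams = RssParams()) -> bool:
    """A plain yes/no answer: no crash probabilities are computed."""
    return gap_m >= rss_safe_distance(v_rear, v_front, p)


print(rss_safe_distance(20, 20))  # 40.375
print(is_safe(45, 20, 20))        # True
print(is_safe(30, 20, 20))        # False
```

With these assumed values, two cars both travelling at 20 m/s (72 km/h) need a gap of about 40 m. Because the check returns a definite yes or no, the car can commit to any maneuver that keeps it on the safe side of that line instead of waiting for an estimated crash probability to fall below some threshold.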

Intel hasn't yet worked with insurance companies or regulators to formalize RSS's role in assigning fault after crashes, but the system could be one tool to help investigators determine the cause of an AV-involved incident. Equipment can fail, sensors can incorrectly interpret the world around them, and human drivers will certainly collide with AVs, says Jack Weast, vice president of autonomous vehicle standards at Mobileye. "Accidents will happen," he says. "It could be the AV, it could be the human-driven car, but you need to know who did what, why, and when."

Trolley Problems

Intel doesn't claim that RSS is infallible. Crashes could still happen as a result of equipment failures, sensor malfunctions, and, of course, human error. And an RSS-equipped AV won't avoid one accident if doing so would create another. It is, however, highly vigilant and sometimes more assertive than you'd expect a robot to be.

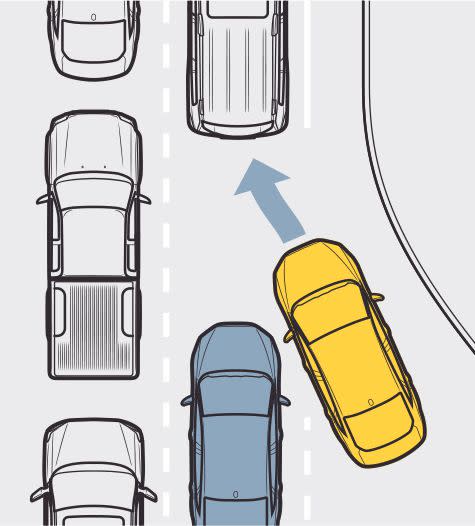

Scenario 1: Traffic is deadlocked, and human drivers won't let the AV merge. RSS permits the car to assert itself by creeping into the lane, pressuring the car behind to make an opening. If that car refuses to stop, though, the AV will abort this plan and move off to the side until it can safely enter the lane.

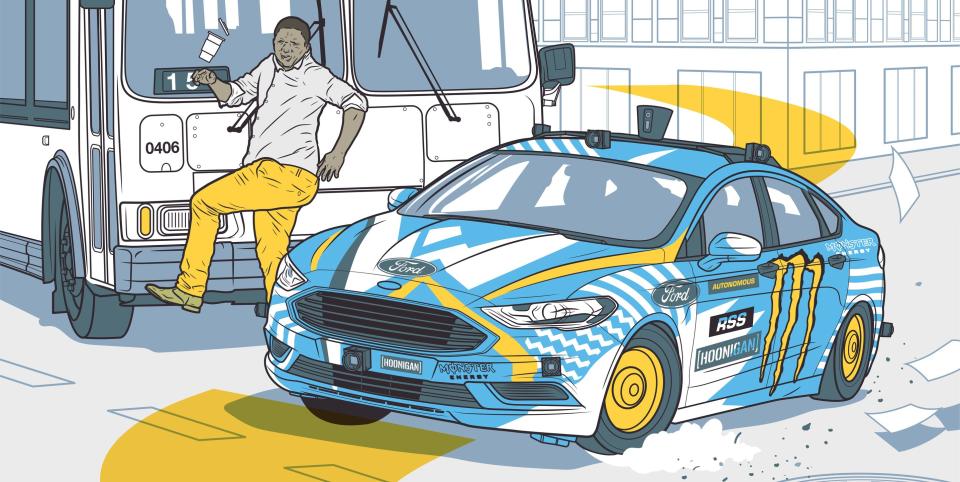

Scenario 2: A bus shelter blocks the AV's view of pedestrians waiting at a crosswalk. RSS allows the car to proceed with caution, based on assumptions about how fast people typically move. The AV should be able to stop in time to avoid a collision with an errant crosswalker.
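"Proceed with caution" can be made concrete with basic stopping-distance arithmetic. The Python sketch below is a simplified illustration, not Mobileye's actual pedestrian rule: it assumes the car must be able to stop within a given distance (for instance, the point where a pedestrian hidden by the shelter could step into its path) and solves for the highest speed that allows this. The response time, braking rate, and function names are hypothetical. The expected walking speed the article mentions would, in a fuller model, decide how much distance the car has to plan for.

```python
import math


def stopping_distance(speed_mps: float, response_time_s: float = 0.5,
                      brake_decel: float = 4.0) -> float:
    """Distance (m) covered while reacting, plus distance covered while braking to a stop."""
    return speed_mps * response_time_s + speed_mps ** 2 / (2 * brake_decel)


def max_speed_to_stop_within(distance_m: float, response_time_s: float = 0.5,
                             brake_decel: float = 4.0) -> float:
    """Highest speed (m/s) from which the car can still stop within distance_m.

    Solves v * rho + v**2 / (2 * a) = d for the positive root v.
    """
    a, rho, d = brake_decel, response_time_s, distance_m
    return -a * rho + math.sqrt((a * rho) ** 2 + 2 * a * d)


v = max_speed_to_stop_within(15.0)
print(round(v, 2), "m/s")               # 9.14 m/s
print(round(stopping_distance(v), 6))   # 15.0
```

With these assumed values, a car that must be able to stop within 15 m of a blind spot should be going no faster than about 9.1 m/s (roughly 33 km/h). The car still moves forward; it simply caps its speed so that a stop is always possible.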

Scenario 3: A human driver in the next lane isn't paying attention to the road and begins to drift over the line into the AV's path. Sensing this intrusion, RSS would direct the AV to perform an evasive maneuver (such as braking) to avoid a collision, much as a wary driver might.

From the April 2019 issue

Yahoo Autos