NTSB gets involved in Tesla Autopilot probe

When it comes to auto-related investigations, the National Highway Traffic Safety Administration is usually the first--and often, only--agency on the scene. Consumers complain about a vehicle's performance, something goes wrong with an important component, and NHTSA fields the call.

But sometimes, the National Transportation Safety Board gets involved. The investigation of last year's fatal crash involving comedian Tracy Morgan was one such instance, and the May 7 collision involving a Tesla Model S in Autopilot mode is another.

Though the NTSB has the right to investigate traffic accidents anytime it likes, the agency usually limits itself to incidents involving airplanes, trains, and ships. What makes the Tesla Model S crash special is that it's the first fatal collision involving semi-autonomous software in a passenger car.

Unlike NHTSA, the NTSB can't impose fines or other penalties, but it can make recommendations about transportation policy. In this particular case, NHTSA may be inclined to take the NTSB's recommendations to heart.

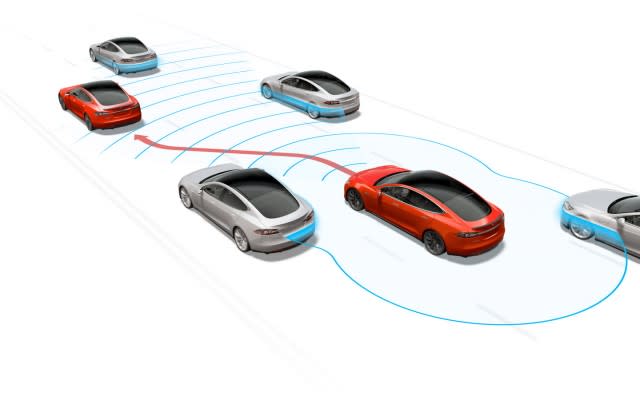

That's because we're barreling toward a future full of autonomous and semi-autonomous cars, trucks, and SUVs, and NHTSA wants to be proactive in dealing with the issues associated with them. The NTSB is very familiar with self-driving vehicles, because airplanes have been using autonomous software for years. (That's why we speak of "autopilot" and not "autodriver".)

And so, a great deal could hang on the NTSB probe. Some of the questions the agency's five investigators hope to answer include:

1. What are Autopilot's shortcomings? Yes, Tesla drivers have safely logged over 130 million miles in Autopilot mode. But as the Florida collision demonstrated far too clearly--and as Tesla has plainly said--Autopilot isn't perfect. That raises the question: what needs to be done to make Autopilot better? Under what conditions should it be used? When should it be disabled or avoided?

2. Does Autopilot make drivers too complacent? When cars are fully autonomous, drivers won't need to pay attention to the road. Until that day arrives--and it won't arrive for decades--drivers of autonomous and semi-autonomous vehicles will need to remain vigilant. They'll need to account for the actions of drivers in non-autonomous vehicles, not to mention safety hazards like roadway construction and distracted pedestrians. How can automakers like Tesla ensure that features like Autopilot don't lull drivers into a false sense of security?

3. Should automakers be allowed to beta-test driving software on public roads with private owners? It's possible to envision software that might be worth beta-testing with consumers--for example, infotainment apps. But Autopilot seems like a very different class of software--one that perhaps ought to be out of beta before it's given to everyday drivers.

We'll keep you posted about the NTSB's findings and its recommendations when they become available.

Yahoo Autos